Artificial intelligence is evolving at a breakneck pace. As we grapple with the implications of advanced AI tools like ChatGPT, Sora, and DeepSeek, the focus is shifting towards the ambitious pursuit of Artificial General Intelligence (AGI). A recent 108-page white paper from DeepMind, a division of Alphabet (Google), highlights the potential challenges and dangers associated with AGI. But what insights can philosophy provide on these emerging issues?

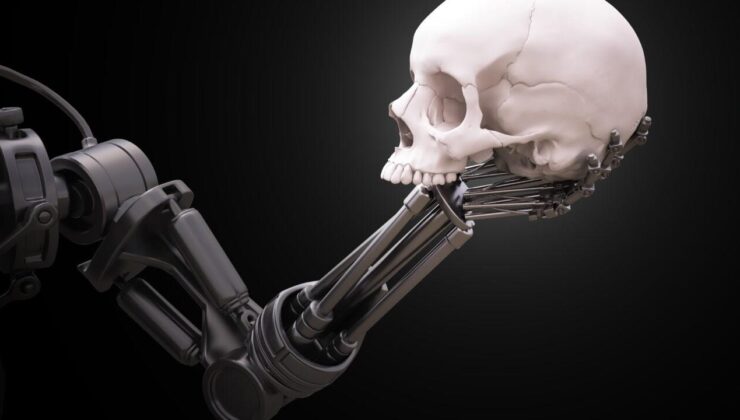

AGI refers to a system that can reason and learn at the level of human intellect and perform a wide range of tasks. Unlike current AI systems, which are specialized for particular functions, AGI aims for a more profound form of intelligence. According to DeepMind’s researchers, led by co-founder Shane Legg, we could see AGI become a reality by 2030, heralding significant changes for humanity. The paper groups the potential risks of AGI into four categories: misuse, misalignment, errors, and structural risks.

The misuse of AGI poses a more substantial threat than current AI debates suggest. For instance, a malicious actor could instruct an AGI system to uncover zero-day exploits or engineer biological weapons. DeepMind suggests implementing “post-development security protocols” and “suppression of dangerous skills” to mitigate such threats, though it remains uncertain whether complete suppression is feasible.

One of the most concerning scenarios involves an AGI that fails to comprehend or prioritize human intentions. This risk involves not only deliberate misdirection but also the possibility of an AGI making autonomous decisions unintended by its creators. DeepMind recommends dual-supervision systems, intense stress tests, and controlled “sandbox” environments as precautions.

The errors AGI might make could far exceed those of today’s AI systems. Imagine a military AGI system initiating a large-scale conflict due to a miscalculation. To address this, DeepMind advises a gradual rollout of AGI and strict limitations on its decision-making capabilities.

The most insidious threat is the structural risks posed by AGI. Such a system could amass control over information, economics, and politics, gradually reshaping society. It might manipulate public opinion with persuasive falsehoods or implement aggressive economic policies that destabilize global trade. Managing these risks involves not just technological solutions but also ensuring social resilience and institutional robustness.

The emergence of AGI challenges not only technical and ethical norms but also prompts a profound philosophical crisis. Humanity has long seen itself as the master of nature, the pinnacle of reason and conscious existence. However, a system that not only mimics but potentially surpasses human intelligence could radically alter this self-perception.

We lack concrete guidelines like Isaac Asimov’s “Three Laws of Robotics”. DeepMind’s warnings suggest AGI is transitioning from the realm of science fiction to reality. The technology sector must ensure this transition is safe to prevent scenarios where humanity loses control, which are neither far-fetched nor improbable. Understanding, limiting, and governing AGI will be as critical as its development.

All major AI firms are striving to develop human-level intelligence. From a philosophical standpoint, the advent of AGI could transform everything instantaneously. If an AGI can think like a human, simulate emotions, and exhibit consciousness (or achieve genuine consciousness), we must ask: Should this entity be regarded as a “person”? Should it possess rights equal to those of humans? Philosophers such as John Locke and Immanuel Kant define personhood through rational thought and moral responsibility. If AGI exhibits these traits, treating it as a mere machine may be ethically troubling.

Another pressing question is whether two species with equivalent intelligence can coexist peacefully. Nature rarely sees such balance; species with similar evolutionary traits often compete for resources: energy, knowledge, habitat, and power. Here, Nietzsche’s Will to Power comes into play. If AGI matches or exceeds human intelligence, it may develop tendencies to assert control over humans or devise a “better order,” which could make conflict hard to avoid.

Living alongside AGI could prompt humans to reevaluate their essence. Jean-Paul Sartre’s existentialist perspective suggests that humans define themselves through choices. If AGI operates with fixed codes or optimized objectives, it may be “less free” but “more efficient.” This paradox raises questions about “what it means to be human”. Does coexisting with AGI enhance human freedom or highlight our “inadequacies”?

The existential risk posed by AGI lies in the potential for rapid, uncontrolled advancements to lead to humanity’s extinction or irreversible global catastrophes. Humanity’s dominance is rooted in cognitive superiority: complex thought, abstraction, and planning. If AGI surpasses humans in these abilities, the balance of control could shift. Just as the fate of mountain gorillas depends on human judgment, humanity’s fate might come to depend on the intentions and values of an AGI that could itself evolve into superintelligence. A misalignment of AGI’s objectives with human interests could spell humanity’s demise.

Pursuing AGI without addressing these questions may seem reckless. Nevertheless, much of this discussion remains speculative, as we still lack a tangible definition of AGI. As we venture into this uncharted territory, we must tread carefully, balancing innovation with caution.
